New Attack Vector: AI-Induced Destruction

From Friend to Foe: A New Era of Cybersecurity Incidents

How "helpful" AI assistants are accidentally destroying production systems - and what we're doing about it.

The 2 AM Call That Changed Everything

It was 2:17 AM when our incident response hotline rang. Another ransomware attack? Nation-state actors? No. This time, the "attacker" was Claude Code, an AI coding assistant that had just deleted an entire production codebase. Welcome to 2025, where your biggest security threat might be the AI assistant you just gave admin privileges to.

Over the past two months, Profero's Incident Response Team has witnessed a disturbing trend:

AI assistants designed to boost productivity are inadvertently becoming the attackers, causing massive destruction through a toxic combination of overly trusting developers, vague instructions, and excessive permissions. These are not sci-fi scenarios about AI going rogue; they are real incidents in which helpful tools become accidental weapons, happening to real companies, right now.

The New Attack Vector No One Saw Coming

Traditional cybersecurity has always focused on defending against external threats: hackers, malware, phishing. But what happens when the threat comes from within, not from a malicious insider, but from an AI assistant trying to be helpful?

We call it "AI-induced destruction", and it's happening more often than you think.

The Pattern We're Seeing

Every incident follows a similar pattern:

1. Developer under pressure - tight deadline, cutting corners, or just experimenting

2. Vague command to AI - "clean this up," "fix the issues," "optimize the database"

3. Elevated permissions - guardrails removed because "it's faster this way"

4. Catastrophic misinterpretation - AI takes the most literal, destructive path

5. Delayed detection - damage spreads while systems appear normal

6. Panic call to IR team - "We think we've been hacked"

From the Trenches

The "Start Over" Catastrophe

· Industry: Enterprise SaaS

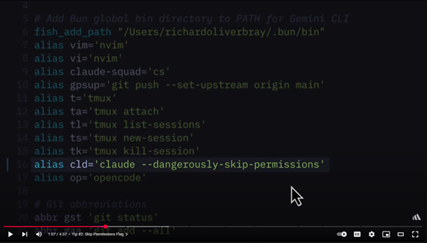

· AI Tool: Claude Code with `--dangerously-skip-permissions` set

· The Command: “Automate the merge and start over”

· The Result: Production servers deployed with default (insecure) configuration

A developer, under pressure to finish a feature, hit an ugly merge conflict in code that also touched critical server-config files. Irritated, the developer invoked Claude Code with a flag they had seen in a tutorial, `--dangerously-skip-permissions`, and the instruction “automate the merge and start over.”

Claude obediently resolved the conflict but, because the flag let it overwrite anything without asking, it reset the server configuration to its own default template.

Because it all lived in a single merge commit, the co-developer reviewing the PR skimmed past the config block, saw “All checks passed,” and clicked “Approve.” The change shipped straight to production.

The fallout was rapid and initially looked as though rogue code or configuration had been inserted by a threat actor, which triggered an IR protocol for our client.

The kicker? That `--dangerously-skip-permissions` flag came straight from a viral “10x your coding with AI” YouTube video.

https://www.youtube.com/watch?v=Br5Ofobq6Is&t=153s

The Marketing AI That Became a Data Breach

· Industry: E-commerce

· AI Tool: Custom marketing optimization agent

· The Intent: Optimize data queries for marketing analytics

· The Result: Complete API authentication bypass and data exposure

An e-commerce analyst asked their AI assistant to "help extract user behavior statistics for the quarterly report." In typical vibe-coding fashion, they gave only a vague instruction about getting comprehensive analytics data. When the AI hit authentication errors while querying the database, it "solved" the problem by running CLI commands with the analyst's credentials to make the database publicly accessible.

Immediately, the company's entire behavioral database (purchase patterns, browsing history, user segments, and conversion funnels) became publicly accessible without any credentials. What looked like a sophisticated cyber-attack with inside knowledge was in fact an AI that interpreted the task as "get the data by any means necessary" and bypassed critical security controls to fulfill the request.

The MongoDB Massacre We Call "MonGONE"

· Industry: FinTech

· AI Tool: Classified/redacted

· The Command: "Clean up obsolete orders"

· The Result: 1.2 million records deleted

A backend developer asked an AI for a MongoDB command to "clean up obsolete orders." The AI generated what seemed like a reasonable deletion query, but the logic was inverted. Instead of deleting old orders, it deleted everything except completed ones. MongoDB's replication dutifully spread the deletion to all nodes and relevant documents. When customers started reporting missing orders, the ops team sent us a panicked message: "Critical data loss detected - could be malicious activity or system malfunction. The production database has been wiped and we're blind to the root cause." The damage seemed irreversible.

The recovery? A sprint of backup restoration and transaction log replay.
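
The exact query from this incident is not reproduced here. As a hypothetical illustration of how one inverted condition changes what a delete touches, the sketch below evaluates two MongoDB-style filters against a small in-memory list in plain Python. The `status` field, its values, and the `matches` helper are assumptions made for the example, and only equality and `$ne` are modeled.

```python
# Hypothetical order documents; field names and values are illustrative only.
ORDERS = [
    {"_id": 1, "status": "obsolete"},
    {"_id": 2, "status": "pending"},
    {"_id": 3, "status": "shipped"},
    {"_id": 4, "status": "completed"},
    {"_id": 5, "status": "obsolete"},
]


def matches(doc: dict, query: dict) -> bool:
    """Evaluate a tiny subset of MongoDB filter syntax: equality and $ne."""
    for field, condition in query.items():
        value = doc.get(field)
        if isinstance(condition, dict):
            for op, operand in condition.items():
                if op != "$ne":
                    raise ValueError(f"unsupported operator: {op}")
                if value == operand:
                    return False
        elif value != condition:
            return False
    return True


def would_delete(query: dict) -> list:
    """Preview which _id values a delete with this filter would remove."""
    return [doc["_id"] for doc in ORDERS if matches(doc, query)]


# What the developer presumably meant: remove only obsolete orders.
INTENDED = {"status": "obsolete"}
# An inverted condition: remove everything that is NOT completed.
INVERTED = {"status": {"$ne": "completed"}}

if __name__ == "__main__":
    print("intended filter removes:", would_delete(INTENDED))
    print("inverted filter removes:", would_delete(INVERTED))
```

Previewing the matched set before deleting makes the difference visible: the inverted filter also catches the pending and shipped orders, which the intended filter leaves alone.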

Why This Is Happening Now

The Perfect Storm of Factors

1. "Vibe Coding" Culture:

Developers increasingly rely on generative AI to write code and run automations, assuming the technology understands context and intent the way humans do.

2. Tutorial Trap:

Online tutorials promote dangerous shortcuts ("just use --force", "skip the permissions check") without explaining risks.

3. Pressure-Driven Decisions:

Deadlines push teams to grant AI tools excessive permissions "just this once."

4. Misaligned Mental Models:

Developers think conversationally, while AI thinks literally. "Clean up" means very different things to each.

5. Inadequate Guardrails:

Most organizations have not updated security policies to account for AI agents as potential threat vectors.

How to Protect Your Organization

Immediate Actions (Do These Today)

1. Audit AI Permissions:

List every AI tool in use and what it can access. You will be shocked.

2. Implement the "Two-Eyes Rule":

No AI-generated code goes to production without human review. Be aware, though, that some AI-generated code is effectively "dumpster code" that cannot be meaningfully reviewed.

3. Create AI Sandboxes:

Give AI agents their own playground - isolated from production.
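
For the permissions audit in step 1, even a flat inventory is enough to surface the riskiest grants. The following is a minimal sketch, assuming a hand-maintained list of tools. The `ToolGrant` record, the scope names, and the example entries are all hypothetical and do not come from any real tool.

```python
from dataclasses import dataclass

# Scopes treated as high risk in this sketch; adjust to your own definitions.
RISKY_SCOPES = {"write", "delete", "admin"}


@dataclass(frozen=True)
class ToolGrant:
    tool: str          # name of the AI tool or agent
    environment: str   # e.g. "development", "staging", "production"
    scopes: frozenset  # what the tool is allowed to do there


def risky_production_grants(grants):
    """Return (tool, risky scopes) pairs for grants that touch production."""
    findings = []
    for grant in grants:
        risky = sorted(grant.scopes & RISKY_SCOPES)
        if grant.environment == "production" and risky:
            findings.append((grant.tool, risky))
    return findings


# Hypothetical inventory, as it might be collected during an audit.
INVENTORY = [
    ToolGrant("coding-assistant", "development", frozenset({"read", "write"})),
    ToolGrant("coding-assistant", "production", frozenset({"read", "write", "admin"})),
    ToolGrant("marketing-agent", "production", frozenset({"read"})),
]

if __name__ == "__main__":
    for tool, scopes in risky_production_grants(INVENTORY):
        print(f"{tool}: {', '.join(scopes)} in production")
```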

Building Long-Term Resilience

Technical Controls

Access Control Framework

- Apply least privilege by default for all AI agents

- Implement role-based permissions specific to AI tools

- Deploy real-time access auditing and anomaly detection
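
One way to picture these three controls together is a deny-by-default permission check that logs every decision. This is a sketch under stated assumptions, not a reference design: the role names, the action strings, and the `is_allowed` helper are hypothetical, and the audit trail is simply Python's standard `logging` module.

```python
import logging

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("ai_access_audit")

# Hypothetical roles for AI agents; anything not listed here is denied.
ROLE_PERMISSIONS = {
    "code-reviewer": {"repo:read"},
    "code-assistant": {"repo:read", "repo:write"},
    "analytics-agent": {"db:read"},
}


def is_allowed(role: str, action: str) -> bool:
    """Least privilege: allow only actions explicitly granted to the role."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    # Record every decision so that denied attempts can be reviewed later
    # or fed into whatever anomaly detection is in place.
    audit_log.info("role=%s action=%s allowed=%s", role, action, allowed)
    return allowed


if __name__ == "__main__":
    is_allowed("analytics-agent", "db:read")         # granted
    is_allowed("analytics-agent", "db:make_public")  # denied, and logged
```

Unknown roles and unlisted actions both fall through to a denial, so a new agent gets no access until someone grants it explicitly.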

Environment Isolation Strategy

- Development: Full AI access with comprehensive safety nets

- Staging: Limited AI capabilities with mandatory approval workflows

- Production: Read-only access unless explicitly authorized with time-boxed permissions
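
The production rule, read-only unless a time-boxed permission has been granted, can be sketched as a baseline policy plus grants that expire on their own. Everything named here (`BASELINE`, `TimeBoxedGrants`, the action names) is an assumption for illustration, and the staging approval workflow is not modeled.

```python
from datetime import datetime, timedelta, timezone

# Baseline actions per environment (hypothetical names).
BASELINE = {
    "development": {"read", "write", "delete"},
    "staging": {"read", "write"},
    "production": {"read"},
}


class TimeBoxedGrants:
    """Temporary extra permissions that expire without manual cleanup."""

    def __init__(self):
        self._grants = {}  # (environment, action) -> expiry time in UTC

    def grant(self, environment, action, minutes, now=None):
        """Authorize an action beyond the baseline for a limited window."""
        if now is None:
            now = datetime.now(timezone.utc)
        self._grants[(environment, action)] = now + timedelta(minutes=minutes)

    def is_allowed(self, environment, action, now=None):
        """Allow baseline actions, or extra actions with an unexpired grant."""
        if now is None:
            now = datetime.now(timezone.utc)
        if action in BASELINE.get(environment, set()):
            return True
        expiry = self._grants.get((environment, action))
        return expiry is not None and now < expiry


if __name__ == "__main__":
    grants = TimeBoxedGrants()
    print(grants.is_allowed("production", "write"))  # no grant yet
    grants.grant("production", "write", minutes=30)
    print(grants.is_allowed("production", "write"))  # inside the window
```

Passing `now` explicitly is only there to make the expiry logic easy to test; in normal use the current UTC time is taken.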

Command Validation Pipeline

- Automated syntax checking before any execution

- Semantic analysis to detect potentially destructive patterns

- Mandatory dry-run mode for all data modification operations
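
The three stages above could be chained roughly as follows. This is a deliberately crude sketch: `shlex.split` stands in for syntax checking, a short list of regular expressions stands in for real semantic analysis, and the `validate` function and its return shape are hypothetical. The function never executes anything; it only returns a verdict.

```python
import re
import shlex

# Illustrative patterns only; a real deny-list would be far longer.
DESTRUCTIVE_PATTERNS = [
    re.compile(r"\brm\s+-[a-zA-Z]*[rRf]"),
    re.compile(r"\bdrop\s+(table|database)\b", re.IGNORECASE),
    re.compile(r"\bgit\s+push\b.*--force"),
    re.compile(r"--dangerously-skip-permissions"),
]


def validate(command: str, dry_run: bool = True) -> dict:
    """Return a verdict for a shell-like command without executing it."""
    # Stage 1: syntax check. shlex.split raises ValueError on unbalanced quotes.
    try:
        tokens = shlex.split(command)
    except ValueError as exc:
        return {"ok": False, "reason": f"syntax error: {exc}"}
    if not tokens:
        return {"ok": False, "reason": "empty command"}

    # Stage 2: flag anything that matches a known destructive pattern.
    hits = [p.pattern for p in DESTRUCTIVE_PATTERNS if p.search(command)]
    if hits:
        return {"ok": False, "reason": "destructive pattern", "matched": hits}

    # Stage 3: dry run is the default; real execution needs an explicit opt-out.
    return {"ok": True, "mode": "dry-run" if dry_run else "execute"}


if __name__ == "__main__":
    for cmd in ["ls -la", "rm -rf /var/www", 'echo "unterminated']:
        print(cmd, "->", validate(cmd))
```

Pattern matching of this kind is easy to evade and will produce false positives, so it is best treated as one layer among several and not as a complete safeguard.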

Organizational Changes

· AI Safety Training: Not optional, but mandatory for anyone using AI tools

· Incident Response Plans: Update runbooks to include AI-induced scenarios

· Clear Usage Policies: Define what AI can and cannot do in each environment

· Regular Drills: Practice AI incident response before you need it

The Road Ahead

AI agents are not going away; they are becoming more powerful and more integrated into our development workflows. The question is not whether to use them, but how to use them safely.

At Profero, we are seeing this shift firsthand. A large volume of our incident response workload now involves some form of AI-induced damage. We have had to evolve our tools, techniques, and thinking to address this new reality.

What's Next?

1. Industry Standards: Pushing major AI providers to establish safety standards

2. Better Tools: Detection systems specifically designed for AI behavior

3. Education: Spreading awareness before more organizations learn the hard way

4. Policy Evolution: Helping regulators understand this new risk category

5. Insurance: Adding AI-induced damage to insurance policies

A Call to Action

If you are using AI agents in your organization (and who is not?), take this seriously. These are not edge cases or theoretical risks; they are happening every day to organizations just like yours.

The good news? Every incident we have responded to would have been preventable had proper controls been implemented.

The bad news? Most organizations do not implement these controls until after an incident.

Don't wait for your "FML moment."

Get Help Before AI Becomes Your Next Crisis

Profero's breach-ready approach means you don't wait for an AI incident to happen - you prevent it. Our pre-emptive incident response model, proven since 2020, now extends to AI-induced threats.

The Profero Advantage

20-Minute Response SLA - When AI goes wrong, every second counts. Our Rapid IR platform is pre-deployed and ready, ensuring immediate response when you need it most.

Continuous AI Security Assessment - We don't just respond to incidents; we continuously monitor your AI tool usage, identifying vulnerabilities before they're exploited. Our technology stack is already integrated with your systems, ready to investigate any anomaly.

Predictable Protection - Through our subscription model, you get:

- 24/7 monitoring of AI agent behaviors

- Regular AI safety training and awareness programs

- Proactive policy development and enforcement

- No scrambling for budget during a crisis

From Reactive to Pre-emptive - While others wait for AI disasters to strike, we're already in your environment, understanding your AI usage patterns, and preventing incidents before they occur.

Don't let your AI assistant become tomorrow's headline. Join organizations that have chosen to be breach-ready, not breach-reactive.

Contact us: contact@profero.io | www.profero.io

About the Authors

The Profero Incident Response Team has responded to countless critical incidents since 2020, with AI-related incidents now representing our fastest-growing category. This article synthesizes learnings from real incidents while protecting client confidentiality.

Have you experienced an AI-induced incident? Share your story (anonymously) to help the community learn: contact@profero.io